Epic Games recently released Unreal Engine 5, and I thought it was time that I wrote a series about using Blueprints to show the power of UE5. In this series of posts, I am going to start from the basics, introducing you to Blueprints, and then build up to using Blueprints to create our own games.

What I’m not going to do is recap how to install Unreal. I have seen a lot of Unreal tutorials where the first twenty minutes detail the download and installation process, unnecessarily repeating Epic Games content. You can get the download here and follow the instructions in the video about installing Unreal.

Note: You will require an Epic Games account to install Unreal.

What are Blueprints?

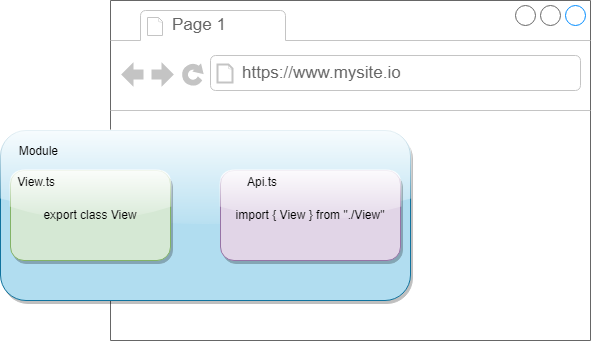

Unreal Engine has a visual scripting system called Blueprints. It gives us a node-based interface for creating and configuring gameplay elements: instead of typing code, we place nodes in a graph and connect them together.

These nodes allow us to do things such as raise and react to events, use variables, or perform actions.
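If you come from a text-based language, it can help to picture a node graph as ordinary event-driven code. The following is a rough Python analogy only, not anything Unreal runs. The names `Graph`, `on`, `fire` and `add_point` are made up for illustration, and "BeginPlay" is used purely as a label for an event.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class Graph:
    """Toy stand-in for a Blueprint graph (hypothetical, not an Unreal API)."""

    def __init__(self) -> None:
        # Variables: named values that nodes can read and write.
        self.variables: Dict[str, float] = {}
        # Event name -> list of actions wired to that event.
        self._handlers: Dict[str, List[Callable[["Graph"], None]]] = defaultdict(list)

    def on(self, event: str, action: Callable[["Graph"], None]) -> None:
        """Wire an action to an event, like connecting two nodes."""
        self._handlers[event].append(action)

    def fire(self, event: str) -> int:
        """Raise an event, run every action wired to it, and return how many ran."""
        handlers = self._handlers[event]
        for action in handlers:
            action(self)
        return len(handlers)


def add_point(graph: Graph) -> None:
    # Action: read a variable, change it, and write it back.
    graph.variables["score"] = graph.variables.get("score", 0) + 1


graph = Graph()
graph.on("BeginPlay", add_point)  # react to an event
graph.fire("BeginPlay")           # raise the event
```

The three ideas from the paragraph above each appear once here: an event is raised with `fire`, a variable lives in `variables`, and `add_point` is the action that runs in response.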

Getting started

Once you have Unreal installed, you launch it from the Epic Games Launcher, so open that up and click the Launch button to open Unreal.

Note: The button is a drop-down because it is possible to have different versions installed.

Once Unreal has started, you will see the Unreal Project Browser. From here, you can either open an existing project or you can create a new one. Click the Games image to open the games options up.

In honour of all good programming tutorials, we will start off with a Hello World project so let’s change the project name to HelloWorld.

We can choose from a number of different templates, such as a blank project, a first-person or third-person game and so on. The different choices give us different defaults, such as a player character in the case of the first-person template. For the moment, we are going to choose a blank template.

I’m going to leave all the other options the same. The Blueprint button simply means that we initially want to create our project as a Blueprint project rather than a C++ one. It is possible to add Blueprint to a C++ project, and C++ to a Blueprint project if we need to, later on. The Starter Content gives us some initial content so that we have something to view. I have unchecked this because I want to start completely fresh.

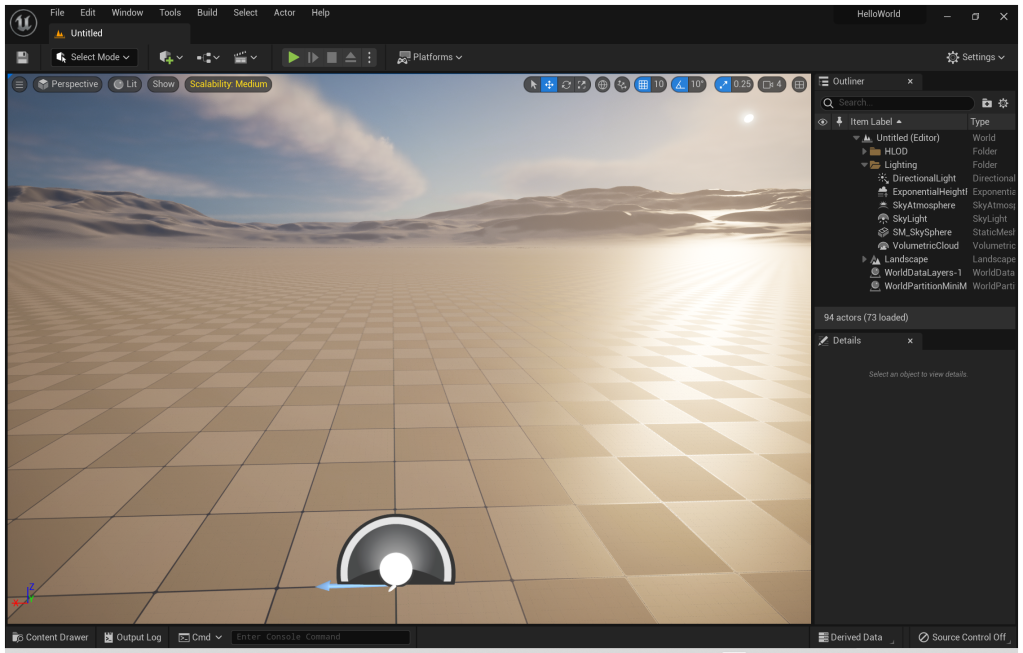

Click Create and the project is created. It may take a few seconds, so there’s nothing to worry about if there is a slight delay. Even though we said we wanted no starter content, the project will have a landscape and some lighting already created for us.

At this stage, most tutorials I have looked at spend time covering all of the windows that make up the editor. Rather than doing that, I will introduce windows as we encounter them.

Navigation in the Viewport

The main area of the window, the part showing the landscape, is known as the Viewport. By default, Unreal opens the perspective viewport. In later tutorials, we will look at how to use different viewport configurations for more fine-grained control of models.

If you hold down your left mouse button and move the mouse, the view in the viewport rotates in the appropriate direction. You can use the arrow keys to move in or out, as well as to shift the viewport left or right.

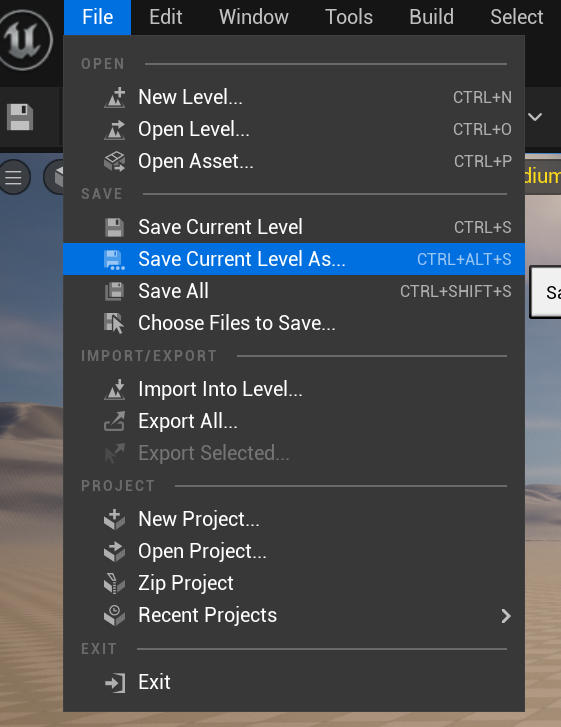

Before we make any changes, we should save our level. To do this, choose Save Current Level As... from the File menu.

When you do this, you will be prompted to save the level. I named my level Earth.

Adding content

Before I add my own content to this project, I want to remove most of the default content that was added when the project was created. On the right-hand side of the screen, there is an Outliner tab that contains a reference to every item that has been added. I am going to get rid of most of the visible items, so I select the following items in the Outliner and press the Delete key on my keyboard to delete them. To remove all of the landscape items, you will have to expand the landscape folder.

If you were wondering why we didn’t remove the contents from the HLOD folder, this is because these are Hierarchical Level of Detail (HLOD) items that Unreal created automatically to improve runtime performance. They do this by replacing several separate meshes with a single, simpler element when the meshes are viewed from far away. The entries here were created from the landscape elements to reduce the amount of detail that Unreal has to render at long distances. When we build this level, they will be removed for us automatically because the landscape elements they were based on have been removed.
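To make the HLOD idea a little more concrete, here is a small Python sketch of the decision it boils down to. This is my own simplification and not how Unreal implements it: the `Mesh` type, the `meshes_to_render` function and the switch distance are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Mesh:
    name: str
    triangles: int


def meshes_to_render(
    cluster: List[Mesh],
    proxy: Mesh,
    distance: float,
    switch_distance: float,
) -> List[Mesh]:
    """Return the detailed meshes up close, or the single proxy when far away."""
    if distance >= switch_distance:
        return [proxy]
    return list(cluster)


def triangle_count(meshes: List[Mesh]) -> int:
    """Total triangles in a list of meshes, as a rough measure of rendering cost."""
    return sum(mesh.triangles for mesh in meshes)


# Made-up numbers: four detailed landscape pieces and one simplified proxy.
landscape = [Mesh(f"Landscape_{i}", 10_000) for i in range(4)]
landscape_proxy = Mesh("Landscape_HLOD", 2_000)

near = meshes_to_render(landscape, landscape_proxy, distance=100.0, switch_distance=5_000.0)
far = meshes_to_render(landscape, landscape_proxy, distance=9_000.0, switch_distance=5_000.0)
```

With these invented values, the nearby view draws all four pieces while the distant view draws only the proxy, which is where the saving comes from.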

As soon as you have removed the elements, make sure to save the project. You can use the File menu and click Save All, or you can use the shortcut by pressing Ctrl + Shift + S.

Making our world

I did promise that we would do a Hello World exercise and, true to my word, the world we are going to “do” is Earth. For the purposes of this tutorial, I am going to download the “2K Earth daymap” image to use on the sphere so go ahead and download the image from here.

Adding the Earth texture is straightforward. Click the Content Drawer at the bottom of the screen.

The content browser window is displayed and we are going to click Import to start the process of importing the world image.

Select the world image in the file dialog and this loads the map into the project as a texture.

While we have loaded the Earth texture, we want to convert this into a material that we can use when we want to render our final Earth out. Right-click on the texture in the content browser and choose Create Material from the context menu.

Save the project to make sure no work has been lost, then build the levels by selecting the Build menu and then clicking Build All Levels.

Unreal will prompt us to ask what operation we want to perform at this point. I am going to choose to delete the HLOD entries:

Make sure that all of the content is selected in the Save Content dialog and click Save Selected.

Depending on the power of your machine, the Build process may take a little while. Please be patient while it performs the build.

Productivity Hint: As we work with Unreal, we will spend a lot of time in the content browser window. If you want the browser to always be available, you can dock the content browser in your editor. To do this, choose the Content Browser option from the Window menu.

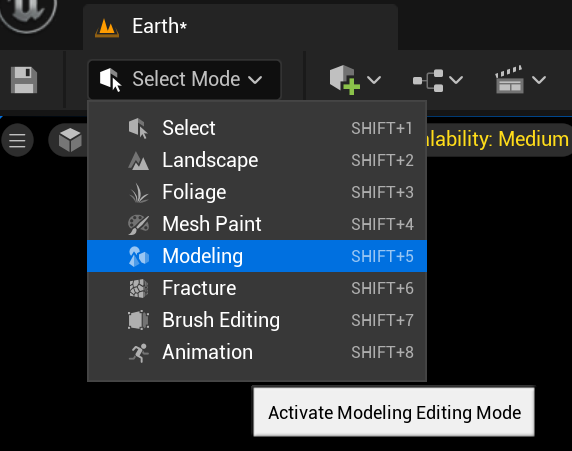

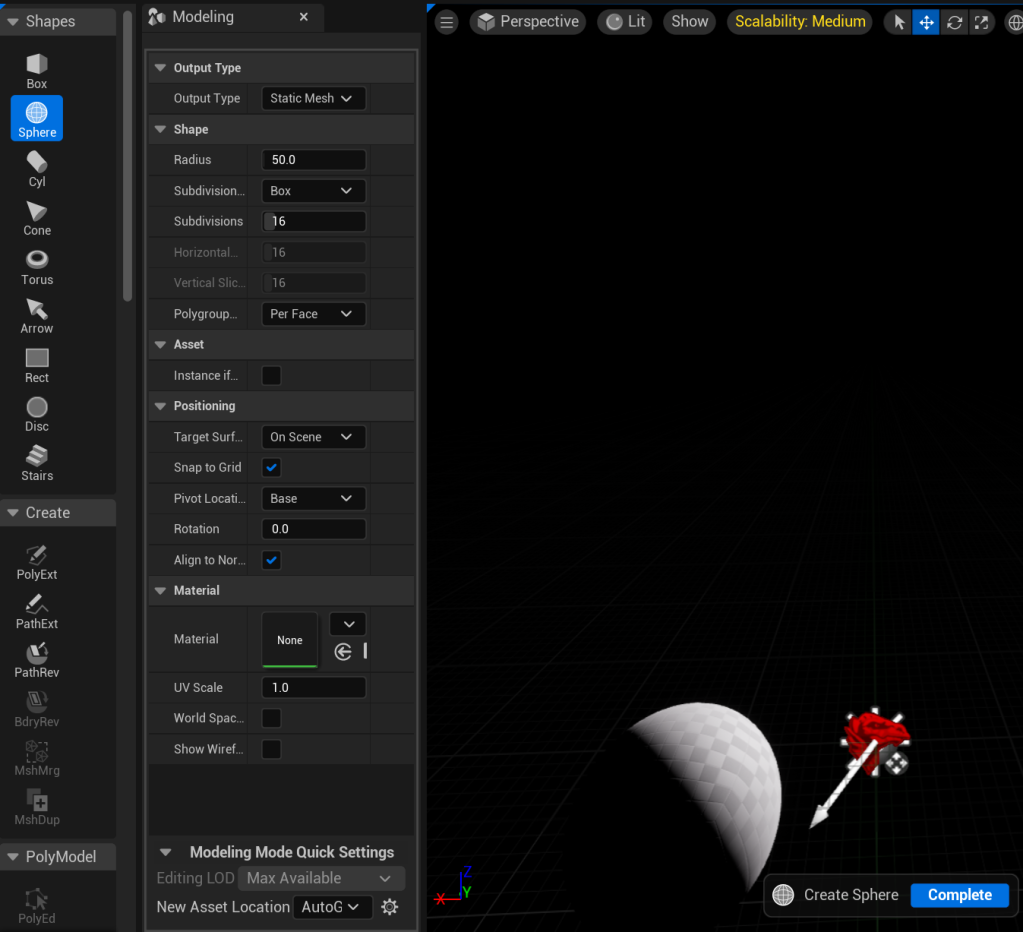

We have one final thing we want to do before we start creating our Blueprint. I want to add the Earth material onto a sphere, which means that I want to add a sphere onto the screen. I am going to switch the viewport into modeling mode. In the toolbar, I click the Select Mode dropdown and choose Modeling from the list of available options.

The left-hand side of the screen shows a vertical toolbar containing the modeling operations I can perform, with a modeling pane beside it. Choose the Sphere in the Shapes section.

Left-click anywhere in the viewport to finish adding the sphere, then click the Complete button. If you don’t click Complete, Unreal lets you add more spheres. Don’t forget to save your work.

Adding blueprints

We are building a world, so it would be a good idea for our world to rotate. What’s the point of having a sphere if we can’t see all sides of it?

We are going to open the blueprint editor, using the Blueprint button in the toolbar.

Note: Every level you create has a blueprint already associated with it. You cannot create a new level blueprint; you can only interact with the one that is already there.

As we are going to create a blueprint for our rotating planet, we click the New Empty Blueprint Class... option in the menu. In future tutorials, I will show other ways to create blueprints. What we should see now is a dialog that allows us to select the parent class.

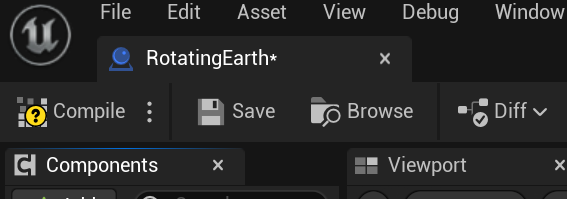

At this point, it is worth pointing out that a blueprint class (usually shortened to blueprint) is just an asset that we can use to add functionality. Blueprints build on top of existing gameplay classes, so they inherit the features of those classes and allow us to override them to add functionality and abilities that are specific to our needs. What we are picking here, then, is the base functionality that we are going to build on, which will be the parent of our blueprint. As we are going to be working with an object that can be placed in the level, we are going to choose Actor as our parent class. A dialog will pop up asking us to name our blueprint. For this example, I have chosen RotatingEarth as the name.
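If the parent class idea is new to you, it is the same inheritance you would find in any object-oriented language. Here is a minimal Python sketch of the relationship. The `Actor` class below is a toy I have written for illustration and is not Unreal's real Actor, and the `place` and `describe` methods are hypothetical.

```python
from typing import Tuple


class Actor:
    """Toy parent class: something that can be placed in a level."""

    def __init__(self) -> None:
        self.location: Tuple[float, float, float] = (0.0, 0.0, 0.0)

    def place(self, x: float, y: float, z: float) -> None:
        """Inherited as-is by every child class."""
        self.location = (x, y, z)

    def describe(self) -> str:
        """Default behaviour that a child class may override."""
        return f"Actor at {self.location}"


class RotatingEarth(Actor):
    """Child class: keeps place() from Actor and overrides describe()."""

    def describe(self) -> str:
        return f"RotatingEarth at {self.location}"


earth = RotatingEarth()
earth.place(0.0, 0.0, 100.0)   # behaviour inherited from the parent
summary = earth.describe()     # behaviour overridden by the child
```

Choosing Actor in the dialog plays the same role as writing `class RotatingEarth(Actor)` here: we get everything the parent can do for free, and only add or replace the parts we care about.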

The Blueprint editor will now be displayed. This is a separate window, so you can move it to another monitor while you are working. Again, I’m not going to explain the different parts of this window. In future tutorials, we will delve into the different parts as we need them.

I am going to start this blueprint by adding the sphere into my blueprint. This is the thing I want to rotate, so it is the thing that needs to be added. To do this, I am going to click the Add button and select the Static Mesh option from the dropdown. This will let me add the mesh I want to rotate.

To add the rotation capability, I click Add again but this time, I am going to search for Rotating Movement.

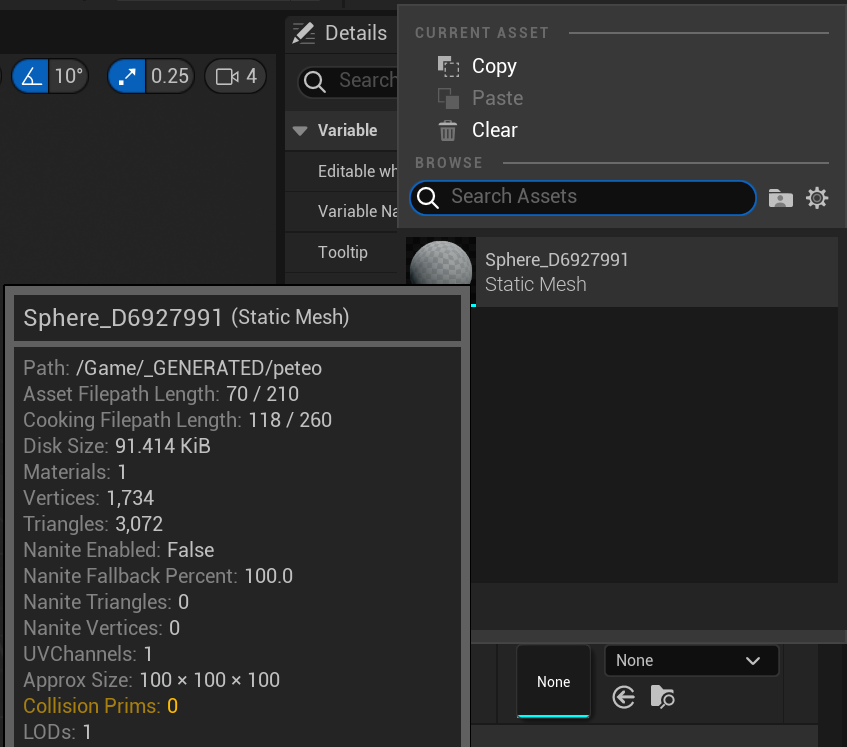
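Conceptually, a rotating movement component does something very simple on every frame: it adds a little rotation based on how much time has passed. The Python sketch below is my own toy model of that idea, not Unreal's implementation. The `RotatingMovement` class, its `advance` method, the `simulate` helper and the rate of 180 degrees per second are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RotatingMovement:
    """Toy model of a component that spins its owner around one axis."""

    yaw_rate: float = 180.0  # degrees per second (assumed value for this sketch)

    def advance(self, yaw: float, delta_seconds: float) -> float:
        """Return the new yaw after delta_seconds, wrapped into [0, 360)."""
        return (yaw + self.yaw_rate * delta_seconds) % 360.0


def simulate(component: RotatingMovement, frames: int, delta_seconds: float) -> float:
    """Apply the component once per frame, starting from a yaw of zero."""
    yaw = 0.0
    for _ in range(frames):
        yaw = component.advance(yaw, delta_seconds)
    return yaw


# Four frames of half a second each at 90 degrees per second.
final_yaw = simulate(RotatingMovement(yaw_rate=90.0), frames=4, delta_seconds=0.5)
```

Multiplying the rate by the elapsed time, rather than adding a fixed amount per frame, is what keeps the spin speed the same regardless of frame rate.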

I now have two components added to my blueprint. Something I haven’t done yet is assign my sphere to the static mesh. Click on the StaticMesh in the Components window and the details for the component will appear in the Details view. In the Static Mesh section, click the dropdown with None in it (I’ve highlighted it in red below).

The sphere mesh will have a random name, but as it is the only one in my level, it is easy to identify.

In the Materials section immediately below, select the None dropdown and choose the material we created in the section above.

Before we can add our rotating earth, we need to compile our Blueprint so that it will be usable. Click the Compile button in the toolbar.

After compiling the Blueprint, close the Blueprint editor window and save your work.

Note: If you close Unreal and open it again, you may still see the default content. If you do, this means that the Earth level isn’t open, so use File > Open Level and select the Earth level.

The final steps

Even though we added a rotating Earth Blueprint using the Sphere mesh we added, you will see that the sphere in the viewport doesn’t look to be a rotating Earth. To fix this, we start by doing something that we might consider to be counterproductive. Select the sphere mesh either by clicking on it in the viewport, or in the outliner, and delete it.

Adding the rotating planet is simple. If you don’t have the content browser docked in your editor, show it using the Content Drawer. Then click the Blueprints folder to show the RotatingEarth blueprint.

Select the RotatingEarth actor and drag it into the viewport.

Rebuild the level using the Build All Levels command in the Build menu. The same build screens will appear that we saw earlier in this tutorial.

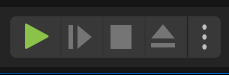

You’re ready to test the level, so click the play button in the toolbar, then use the mouse and W, A, S and D keys to move around in the viewport.

Note: I know that the earth texture doesn’t look quite right. There are steps that we would normally take to work with meshes and spheres that we haven’t undertaken in this tutorial. In a future tutorial, we will look at how to properly texture spheres.

I hope you enjoyed this tutorial. This is the first in a long series I have planned. For those readers who are worried that I won’t be continuing with my series on TypeScript, please don’t worry. I’m still continuing with those tutorials as well.

I have added the repository for this tutorial to GitHub. If you have any questions, please feel free to ask in the comments.